HCI: Analytic Evaluation

Mick McQuaid

2025-03-27

Week TEN

Today

Q and A from last time

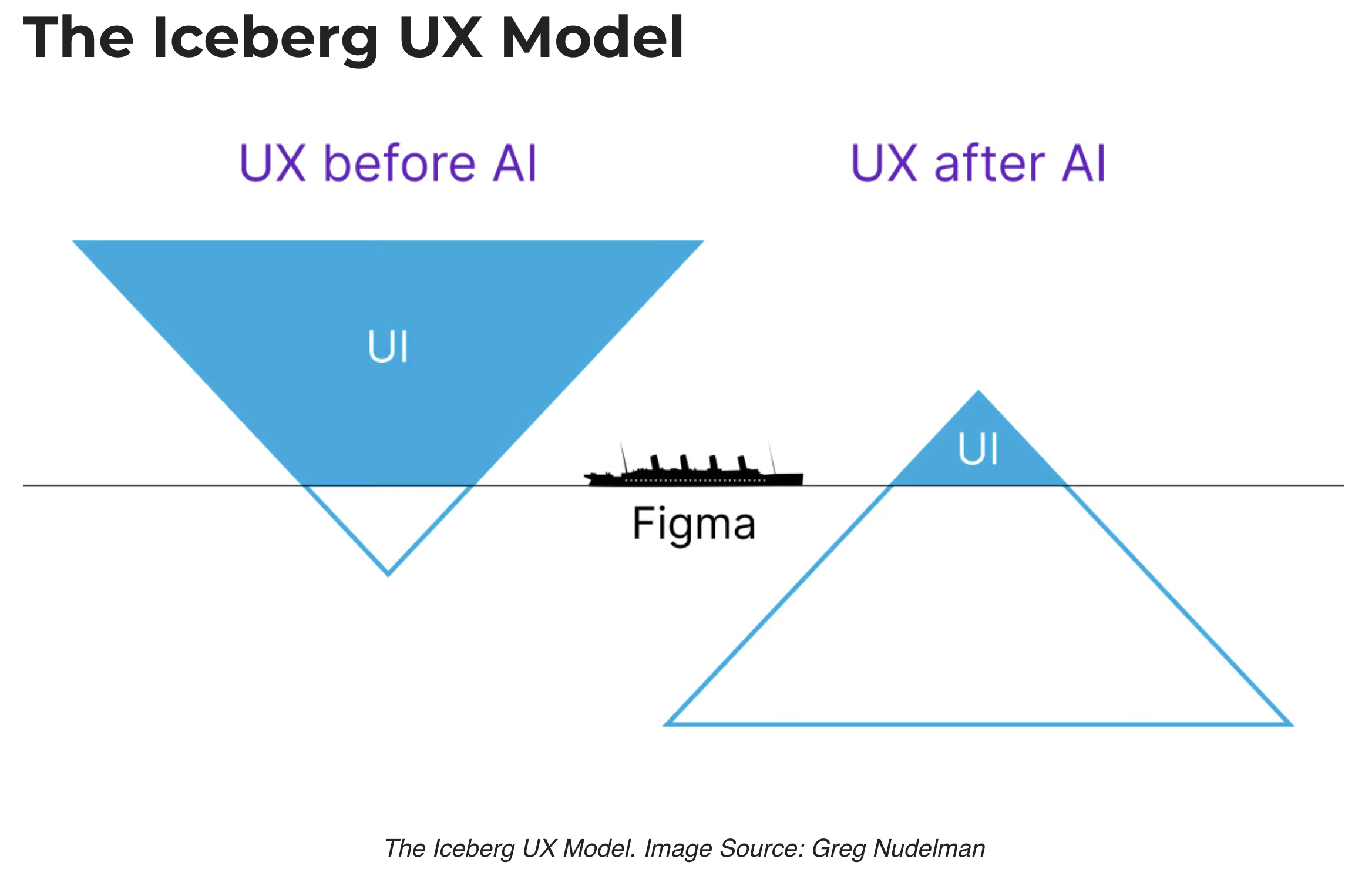

All the questions were about Figma!

Discussion

Design Critique

Julia

Article Presentation

Let’s look at Morris2025.pdf

Analytic Evaluation

Let’s look at HCI experiments

Readings

Readings last week included Hartson and Pyla (2019): Ch 22–24

Readings this week include Hartson and Pyla (2019): Ch 25–26

Assignments

Milestone 4

References

Hartson, Rex, and Pardha Pyla. 2019. The UX Book, 2nd Edition. Cambridge, MA: Morgan Kaufmann.

END

Colophon

This slideshow was produced using Quarto

Fonts are League Gothic and Lato